There is no doubt that the world has become much more intelligent with the rise of machine learning and artificial intelligence. Models can now see, hear, and perform tasks traditionally done by humans. When these tasks are mundane, models help liberate human creativity and propel our civilisation forward. However, outsourcing human thinking to models too extensively may lead to catastrophic outcomes.

Jeff Bezos’s remark that his best decisions were made with anything but analysis has always stuck with me. The insight is particularly striking given that, before founding Amazon, Bezos worked as a financial engineer at D. E. Shaw, one of the largest and most prestigious quantitative hedge funds in the US. The best decisions often require human judgment rather than mathematical optimisation or extrapolation from historical patterns. In his words:

All my best decisions in business and in life have been made with heart, intuition, and guts, not analysis.

When you can make a decision with analysis, you should do so, but it turns out in life that your most important decisions are always made with instinct, intuition, taste, and heart.

As a data scientist, I have found that examining the validity of model-based decision-making from first principles is a humbling experience. I realised that models rarely make good decisions, and there are valid reasons for this. Firstly, there are the "sins" of prediction: some predictions are simply infeasible, while others provide too little actionable insight to be useful. Secondly, the mindset behind decision-making shapes the environment, which in turn influences the outcomes, so the most optimised decisions can end up being suboptimal. Lastly, the rise of models that embed real-world knowledge is particularly dangerous, as they tend to amplify mediocrity.

Predicting the Unpredictable

A significant category of commercial model applications involves predicting the future, such as product adoption, customer responses, and emerging risks. For example, private equity firms attempt to predict the success of consumer brands from their colors and designs in order to identify acquisition targets. Record companies use machine learning to predict, or even create, the next hit song. Risk advisors talk about "proactive" risk management by predicting where risk events may occur. These use cases often attempt to predict rare events with little reliable history.

Interestingly, despite the hype, there is little independent reporting on the commercial success of predictive modeling. Instead, there is ample evidence of disappointment, including failures in medium- and long-range weather forecasting, financial markets, earthquake prediction, and even disease prediction. A striking example is how most mainstream medical authorities miscalculated the progression of COVID-19, leading to overly aggressive policies with adverse consequences that still linger today. The quip often attributed to Niels Bohr remains remarkably relevant:

Prediction is very difficult, especially about the future.

The main challenges with prediction stem from:

Historical data being unreliable because of countless uncontrolled variables.

The rarity of major events, making statistically significant patterns elusive.

For example, in 2016, many tried to predict how the stock market would respond to Trump’s election. With no prior data (Trump had never been elected before), these predictions lacked useful input. Fast forward to 2025: Trump is back in office, but the world has changed entirely in the meantime. Historical data from 2016 is, once again, largely irrelevant.
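To make the rarity problem concrete, here is a small Python sketch (an illustration, not a model from any real forecasting practice) that computes a 95% Wilson score interval for an event rate. The event counts are hypothetical. Both cases show the same observed 10% rate, but with 20 observations the plausible range runs from roughly 3% to 30%, while 2,000 observations narrow it to roughly 9% to 11%.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate confidence interval for a binomial proportion (z=1.96 gives ~95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    centre = (p_hat + z**2 / (2 * trials)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2)) / denom
    return centre - half_width, centre + half_width


# Same observed 10% event rate, very different amounts of history (hypothetical counts).
for k, n in [(2, 20), (200, 2000)]:
    low, high = wilson_interval(k, n)
    print(f"{k}/{n} events: 95% interval {low:.3f} to {high:.3f}")
```

Major events sit firmly in the first regime: there are too few occurrences to separate signal from noise.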

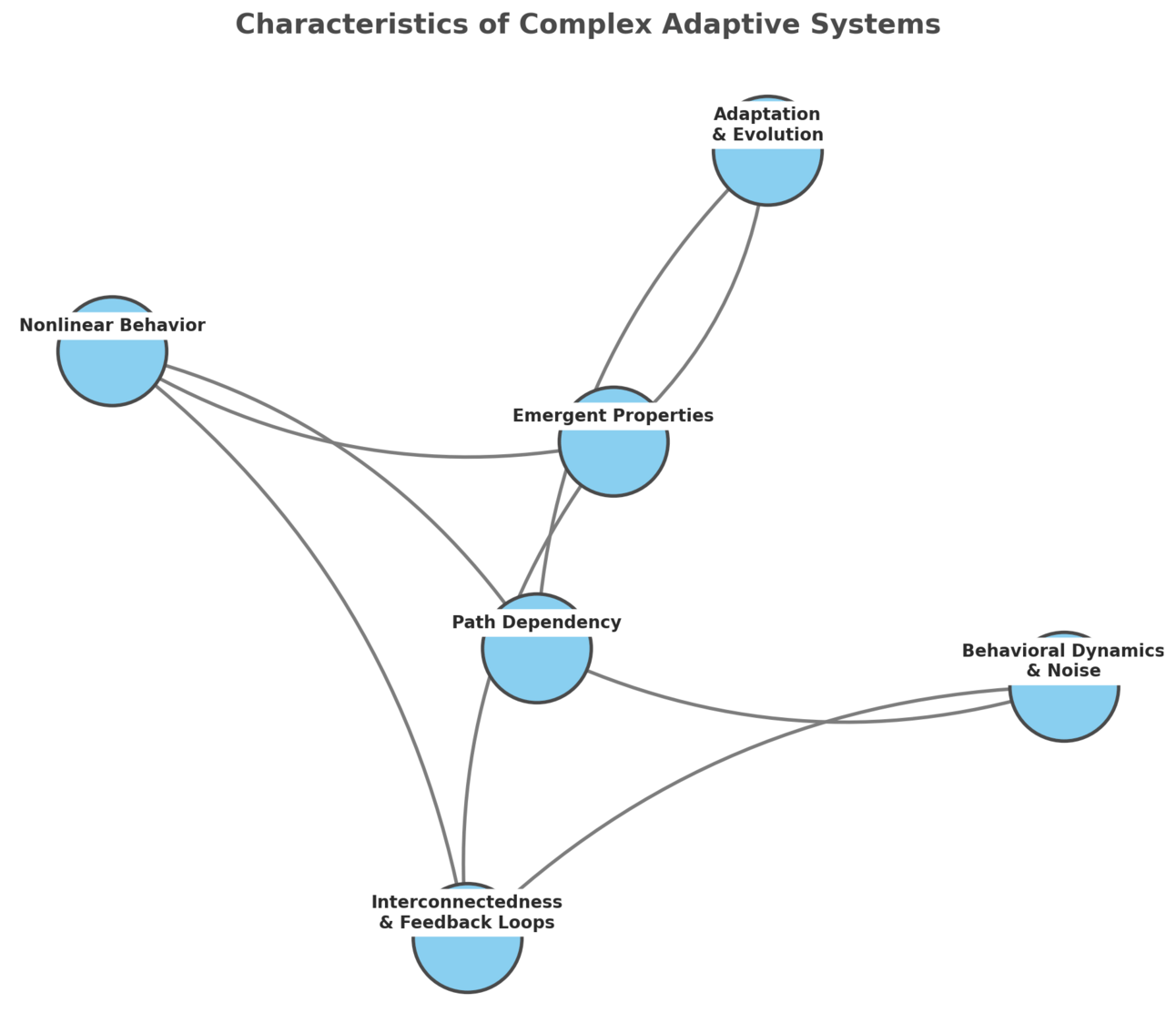

In technical terms, we live in complex systems: dynamic environments where inputs and outcomes constantly evolve. Even in static scenarios, the same inputs can yield different outcomes because of the uncertainty inherent in real-world systems.

Ultimately, large commercial successes rarely stem from theoretical predictions of market responses. Instead, they arise from rapid product iterations based on actual market feedback.

Predictions Without Actionable Insights

When large-scale behaviors with long-term drivers are involved, predictions become more feasible. Examples include disease prognosis, customer attrition, and customer preferences backed by rich historical data. However, accurate predictions do not always lead to good decisions.

Take medical crises, for example, where patient demands often exceed healthcare capacity. Doctors must triage patients into three groups:

Patients who will die whether or not they receive medical attention

Patients who will survive whether or not they receive medical attention

Patients who will survive only if they receive medical attention

Resources should focus on the third group. This logic, while intuitive in medicine, often eludes decision-makers in commercial contexts such as customer attrition.

For instance, attrition models predict which customers are at high risk of leaving. Decision-makers may respond in one of two ways:

Offer incentives to high-attrition-risk customers to retain them

Ignore high-attrition-risk customers, assuming they will leave regardless

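Both approaches seem logical, yet they are opposites. The better solution mirrors medical triage: focus only on customers who would leave without intervention but would stay with it. Incentives spent on customers who stay anyway, or who leave anyway, are wasted.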

While techniques like uplift modeling can optimise this, the underlying driver is often product thinking rather than modeling.
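The triage logic can be sketched in a few lines of Python. This is a minimal illustration, assuming we already have two hypothetical churn estimates per customer, one without an incentive and one with it (as a simple two-model uplift approach might produce). The field names, the 0.5 and 0.1 thresholds, and the customer records are illustrative assumptions, not outputs of a real model.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    customer_id: str
    p_leave_no_offer: float    # assumed estimate: churn probability without an incentive
    p_leave_with_offer: float  # assumed estimate: churn probability with an incentive


def uplift(c: Customer) -> float:
    """Reduction in churn probability attributable to the incentive."""
    return c.p_leave_no_offer - c.p_leave_with_offer


def triage(c: Customer, risk_threshold: float = 0.5, min_uplift: float = 0.1) -> str:
    """Map a customer onto the three triage groups (thresholds are arbitrary assumptions)."""
    if uplift(c) >= min_uplift:
        return "persuadable"  # offer materially lowers churn risk: the analogue of the third group
    if c.p_leave_no_offer >= risk_threshold:
        return "lost cause"   # high baseline risk and the offer barely helps
    return "sure thing"       # low baseline risk and the offer barely helps


def select_targets(customers: list[Customer], budget: int) -> list[Customer]:
    """Spend a limited incentive budget on persuadable customers with the largest uplift."""
    persuadable = [c for c in customers if triage(c) == "persuadable"]
    return sorted(persuadable, key=uplift, reverse=True)[:budget]


customers = [
    Customer("A", 0.90, 0.88),  # highest churn risk, but the offer barely helps
    Customer("B", 0.05, 0.04),  # low risk either way
    Customer("C", 0.70, 0.20),  # offer makes a large difference
    Customer("D", 0.40, 0.25),  # offer makes a moderate difference
]

for c in customers:
    print(c.customer_id, triage(c), round(uplift(c), 2))

print([c.customer_id for c in select_targets(customers, budget=2)])
```

A list ranked purely by attrition risk would put customer A first; ranking by uplift sends the incentive to C and D instead.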

Mathematics-Based Decisions vs Judgment-Based Decisions

Even when predictions are accurate and beneficial, mathematical optimisation can still fall short. Two scenarios illustrate this:

The messages conveyed by decisions.

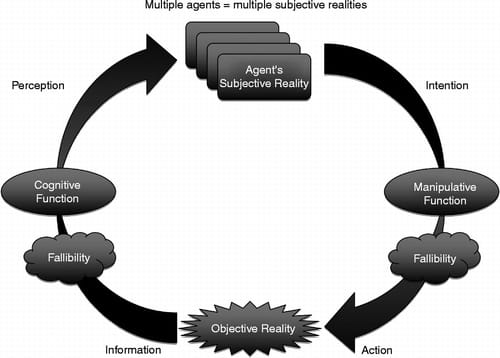

Reflexivity: a phenomenon in which actions change the environment itself.

Dynamic pricing exemplifies mathematics-based decision-making. Platforms like Amazon and Uber, along with providers of financial products, are perfectly positioned to optimise pricing through algorithms. While this yields measurable short-term profits, it can erode long-term relationships.

For instance, if Uber charges $500 for a short ride on a rainy night, the implicit message is purely transactional: “It’s just supply and demand.” Jeff Bezos, by contrast, took a long-term, judgment-based approach at Amazon:

If you’re long-term oriented, customer interests and shareholder interests align more than you might think.

Bezos’s approach to pricing at Amazon reflects this long-term vision. Once a platform reaches Amazon’s scale, it is tempting to raise prices, or at least to hold them steady and pocket the savings as economies of scale drive costs down. Bezos consistently resisted this temptation. Instead, he cut prices whenever possible, even at the expense of short-term profits.

His reasoning was simple: customers value low prices and convenience, and these preferences will not change over the next 10 years. By keeping prices low, Amazon strengthened customer trust, expanded its market, and created a virtuous cycle of dominance. To put this in perspective, Amazon was once a small e-commerce site compared to eBay. At the time of writing, Amazon’s market capitalisation stands at $2.471 trillion, about 80 times that of eBay.

This is a stark example of how judgment-based decisions often trump mathematics-based optimisation.

Reflexivity is another trap.

In competitive markets, mathematical optimisation often triggers destructive cycles. For example, when each competitor undercuts the others to win share, and each cut prompts a matching response, the resulting price war erodes profitability for all participants. Counter-intuitive, judgment-based interventions that go against the data, such as halting a price war, can change the market dynamics and help restore a sustainable equilibrium.
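This destructive cycle can be illustrated with a toy Python simulation, loosely in the spirit of Bertrand price competition. Two hypothetical firms take turns choosing the price that looks optimal given the rival's current price: undercut slightly to capture the market, but never go below unit cost. The starting price, unit cost, and undercutting step are arbitrary assumptions, and the model ignores capacity limits, product differentiation, and customer loyalty.

```python
def best_response(rival_price: float, unit_cost: float, step: float) -> float:
    """Myopically 'optimal' reply: undercut the rival by one step, but never price below cost."""
    return max(unit_cost, rival_price - step)


def simulate_price_war(
    start_price: float, unit_cost: float, rounds: int, step: float = 1.0
) -> list[tuple[float, float]]:
    """Firms A and B alternate best responses; returns (price_A, price_B) after each round."""
    prices = [start_price, start_price]
    history = []
    for r in range(rounds):
        mover = r % 2  # firm A moves on even rounds, firm B on odd rounds
        prices[mover] = best_response(prices[1 - mover], unit_cost, step)
        history.append((prices[0], prices[1]))
    return history


UNIT_COST = 10.0  # hypothetical cost per unit, shared by both firms
for round_no, (price_a, price_b) in enumerate(simulate_price_war(20.0, UNIT_COST, rounds=12), start=1):
    margin = min(price_a, price_b) - UNIT_COST  # margin at the lowest market price
    print(f"round {round_no:2d}: A={price_a:.0f} B={price_b:.0f} margin={margin:.0f}")
```

Every move is locally rational, yet after a dozen rounds both firms price at cost and earn no margin. Escaping the loop requires a decision the per-round optimisation would never produce, such as declining to match the rival's cut.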

Falling Prey to Mediocrity Automatically

While traditional models are susceptible to poor design and misapplication, AI models such as large language models (LLMs) introduce an entirely new set of risks. Unlike their narrower, task-specific predecessors, LLMs are trained on vast amounts of public data, which allows them to synthesise information in ways that were previously unattainable. On the surface, this seems like a monumental breakthrough. However, it presents significant challenges that can quietly undermine originality, creativity, and decision-making.

One of the most concerning issues with LLMs is the quality and nature of the information they learn from. The internet acts as an enormous town hall, where the most frequently posted and shared content tends to be viral, sensational, and widely relatable. These characteristics often correlate with lower quality and superficiality. Highly nuanced, specialised, or valuable insights, by contrast, are typically confidential, unpublished, or buried in inaccessible datasets. As a result, LLMs are disproportionately trained on abundant, low-value content while missing the rarer insights that drive groundbreaking ideas.

This dynamic creates a self-reinforcing cycle of mediocrity:

Models amplify what's common and neglect what's rare. Decisions informed by these models often reflect average thinking, which, over time, stifles innovation and deep expertise.

Systemic homogenisation arises. As LLMs are widely adopted across industries, decision-makers increasingly rely on the same synthesised data, reducing diversity in thought and strategy.

This poses an existential challenge to organisations and individuals alike. When everyone uses the same tools, informed by the same datasets, differentiation becomes nearly impossible. Over time, this convergence breeds a systemic risk: mediocrity becomes the norm, and innovation becomes the exception.

In an era dominated by LLMs, originality becomes increasingly valuable and high-leverage. Ironically, widespread reliance on AI makes human originality the new frontier for innovation.

The End

The rise of models and AI is undeniably transforming the way we think, decide, and act. While their benefits are vast, we must tread carefully. Blindly outsourcing human judgment to algorithms risks suboptimal decisions, eroded relationships, and amplified mediocrity.

The key lies in retaining the human ability to think critically. Long-term success demands that we, as decision-makers, prioritise originality, judgment, and the courage to challenge conventional wisdom.

In a world increasingly governed by models, it is the distinctly human qualities of vision, empathy, and critical thinking that will continue to shape the future, and originality will be increasingly rewarded in an era saturated with AI.